Wood Powder Volume Calculation using Point Cloud Data and AI

Background & Objectives

Measuring the volume of a pile of sediment, crops, or other materials is a labor-intensive task. An AI that provides an easy-to-deploy measurement service would be very helpful for companies suffering from labor shortages. We developed such an AI, using wood powder as an example.

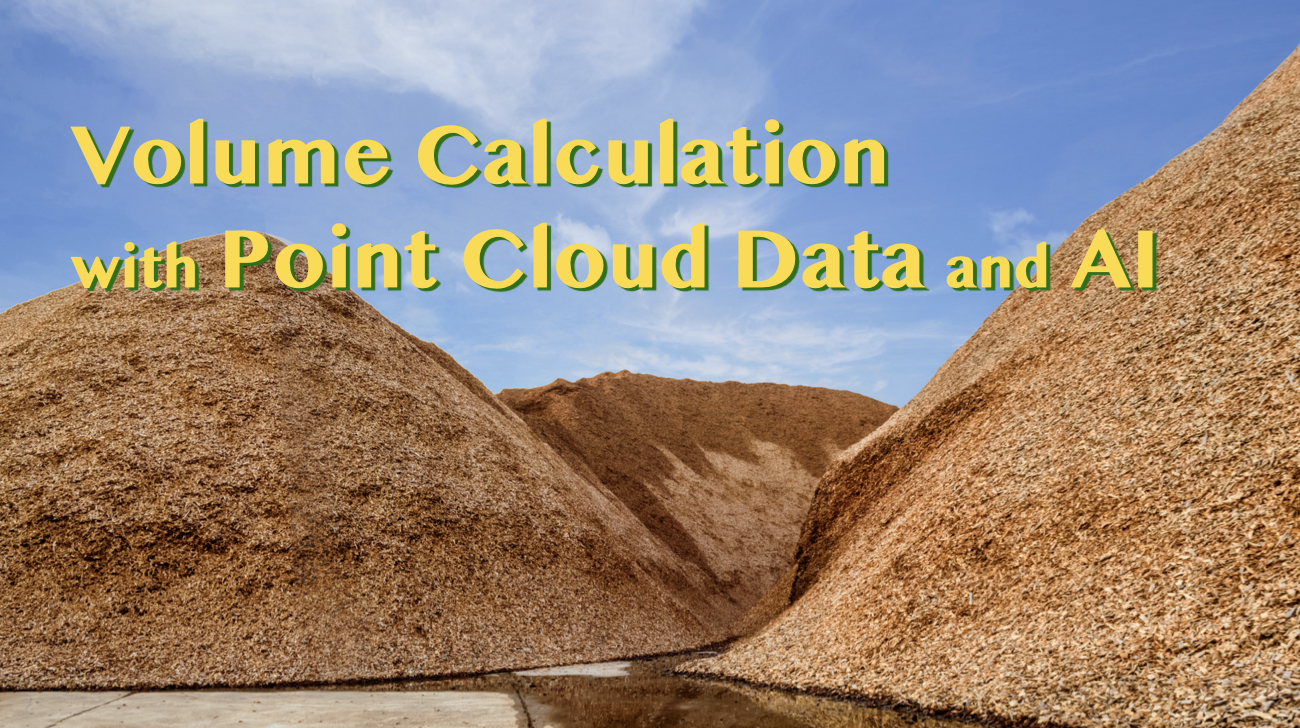

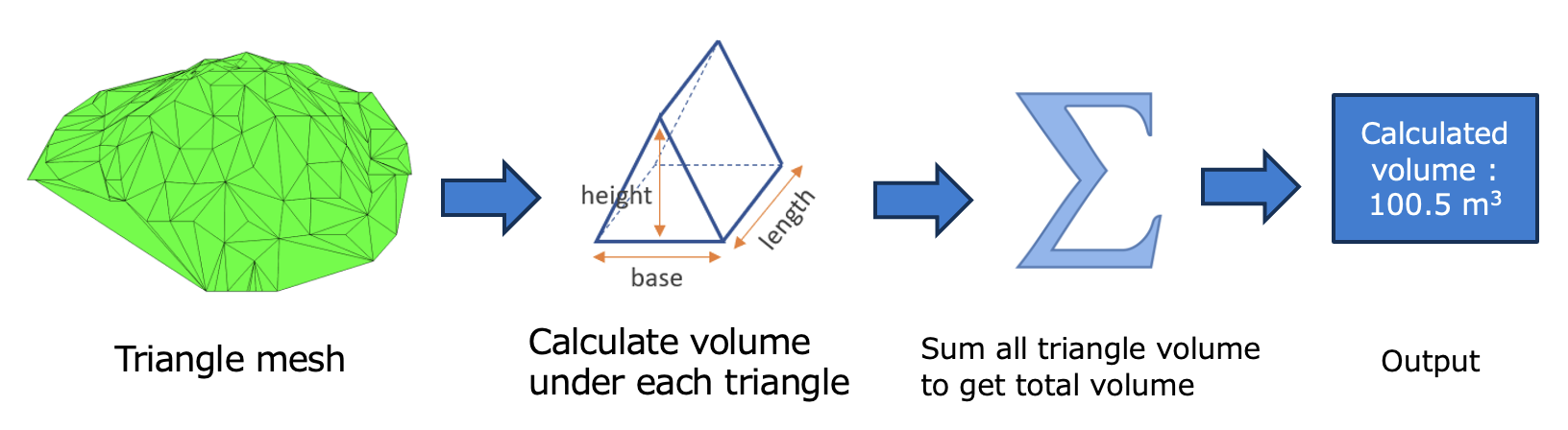

Fig 1: volume calculation

Fig 1 gives an overview of the volume calculation. The AI model takes wood powder point cloud data as input and outputs the volume.

Point Cloud

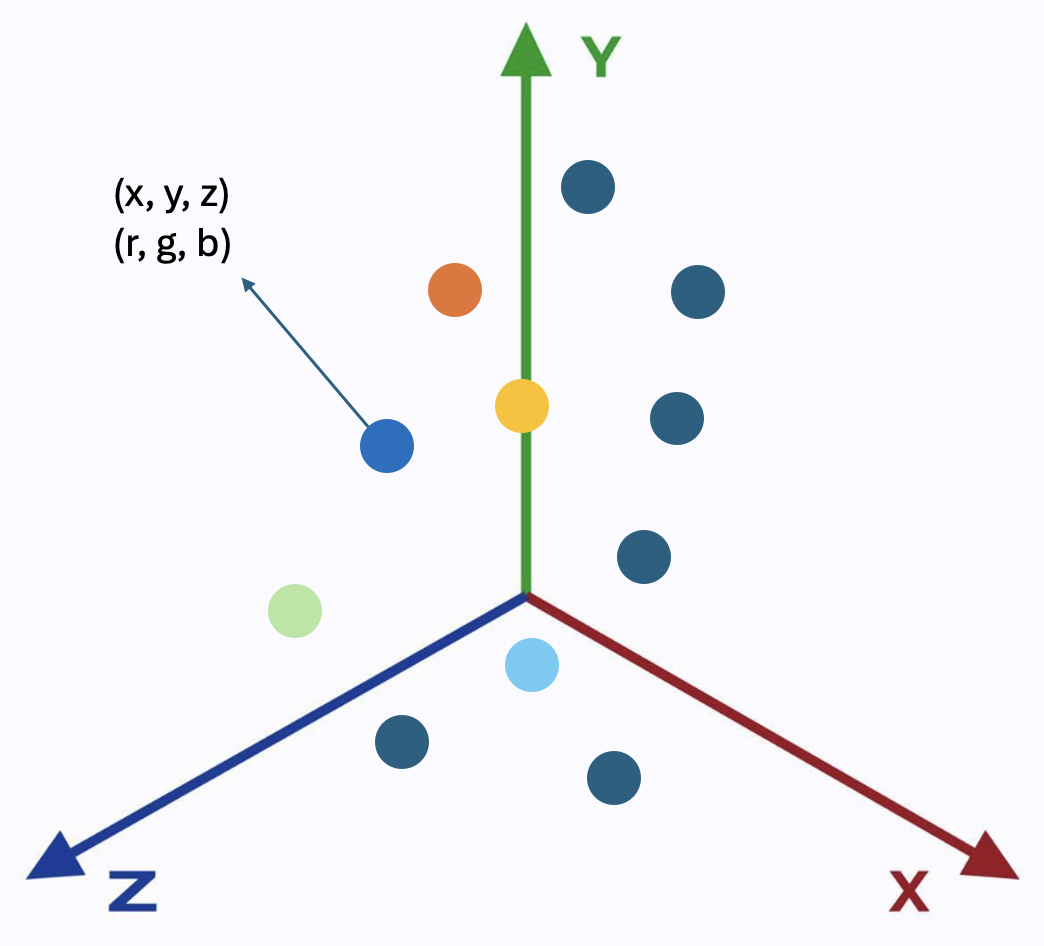

Point clouds represent a basic type of 3D model, consisting of numerous discrete points arranged in three-dimensional space. Each point in the cloud is defined by its coordinates on the X, Y, and Z axes. Often, these points also carry extra information such as color, recorded in RGB format, and luminance values, which indicate the brightness of each point.

Fig 2: point cloud

In Fig 2 the points are plotted in a 3D coordinate system. Each point holds x, y, z coordinates and color values. The most common file format for storing point cloud data is the ‘.las’ format. This binary format preserves LiDAR-specific information without loss.

Point clouds are created by scanning an object or structure, using either a laser scanner or a process called photogrammetry.

Laser Scanners

Laser scanners, particularly those using the Light Detection and Ranging (LiDAR) technique, emit a large number of light pulses (over 160,000 per second) onto an object's surface and measure the time each pulse takes to bounce back. Each pulse helps determine the exact location of a point on the object. With about 15 pulses per one-meter pixel, the collected and processed measurements form a point cloud that provides a precise digital representation of the object.

Photogrammetry

Photogrammetry is the process of deriving measurements from photographs. It involves taking multiple overlapping photographs from different angles and then using software to analyze these images, detect common points, and reconstruct the shape and position of the objects in three dimensions. The process can be divided into several stages: capturing the images, processing the images to create a photogrammetric model, and refining the model to enhance accuracy and detail.

Data Analysis

To train the AI model we need to prepare data. In this section, we will describe the data acquisition process, how to extract meaningful information from the file and how to visualize point cloud data.

Data Acquisition

The wood powder data is 3D point cloud data. Each wood powder pile was scanned with an iPhone 12 Pro, which has a built-in LiDAR sensor. The Scaniverse app was used to collect the data and save it in .las format.

Fig 3: Scaniverse app

Fig 3 shows a screenshot of the Scaniverse app, which is used to collect the data and save it in .las format. The app can also be used to crop the target portion of the data.

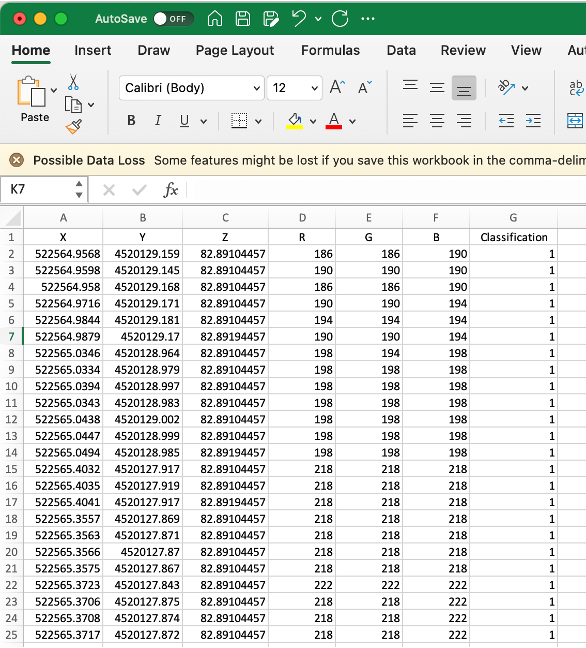

Data Extraction

The 3D point cloud data is saved in ‘.las’ (laser) format. The ‘laspy’ Python library is used to extract the necessary data from a las file. Each point in a las file has several properties, such as its x, y, z coordinates, its RGB color value, and its classification. The classification value is especially important: during annotation we assign class labels to the points, and the model is then trained to segment the points into those classes.
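The extraction step can be sketched roughly as follows. This is a minimal illustration, not the exact code used in the project: `las_to_arrays` and `load_las` are hypothetical helper names, and the sketch assumes the laspy 2.x entry point `laspy.read`, whose result exposes `x`, `y`, `z`, and `classification` (and color channels only when the file's point format stores RGB).

```python
from types import SimpleNamespace

import numpy as np


def las_to_arrays(las):
    """Collect coordinates, colors, and class labels from a LAS-like object.

    `las` only needs x, y, z, and classification attributes, as the object
    returned by laspy.read() provides. Color is optional because not every
    LAS point format stores RGB.
    """
    xyz = np.column_stack([las.x, las.y, las.z]).astype(np.float64)
    labels = np.asarray(las.classification, dtype=np.int64)
    if all(hasattr(las, channel) for channel in ("red", "green", "blue")):
        rgb = np.column_stack([las.red, las.green, las.blue])
    else:
        rgb = None
    return xyz, rgb, labels


def load_las(path):
    """Read a .las file from disk (hypothetical helper; needs `pip install laspy`)."""
    import laspy  # imported lazily so las_to_arrays works without laspy installed

    return las_to_arrays(laspy.read(path))


# Stand-in object with made-up values, used here instead of a real scan.
demo = SimpleNamespace(x=[0.0, 1.0], y=[0.0, 2.0], z=[0.5, 0.7], classification=[0, 1])
demo_xyz, demo_rgb, demo_labels = las_to_arrays(demo)
```

With a real scan, `load_las("pile.las")` (the file name is only an example) would return the same three arrays for the whole file.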

Data Visualization

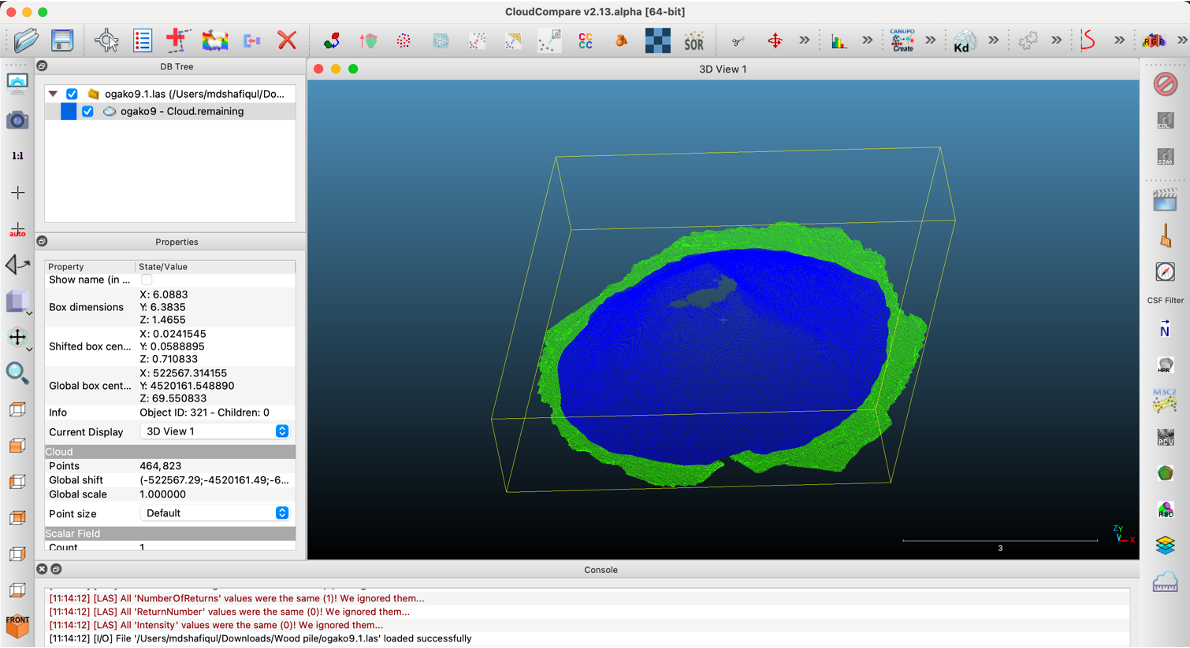

Each point cloud file contains a large number of points (about 300,000). Many tools are available for visualizing point clouds, e.g. CloudCompare and Pointly.

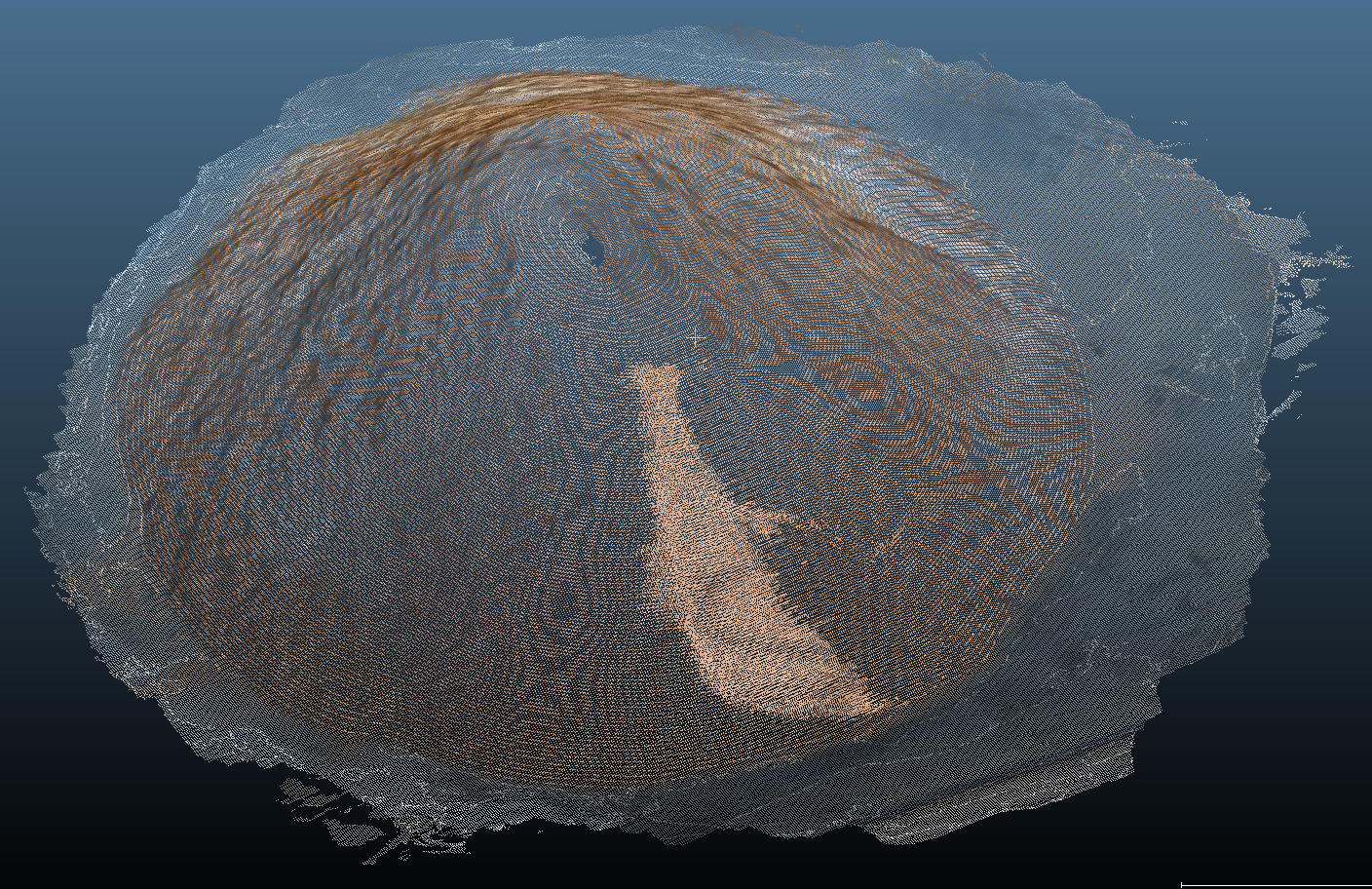

Fig 4: visualization of wood powder point cloud data

Fig 4 shows a visualization of the 3D point cloud data. We used CloudCompare to visualize our wood powder data because it is popular and widely used for handling 3D point cloud data.

Annotation

Annotation is essential for training a model. To train our model we need to annotate the 3D point cloud data. Our scans contain wood powder points, floor points, and sometimes points from other objects (e.g. a wall or a car). We assigned label ‘0’ to wood powder points (target) and label ‘1’ to non-target points (floor, car, wall, etc.).

Annotation Tools

There are many tools for annotating 3D point cloud data, for example CloudCompare (free), Pointly, LabelCloud, VRMESH, and CVAT. We used CloudCompare for annotation because it is free for commercial use and quite popular.

Annotation Process

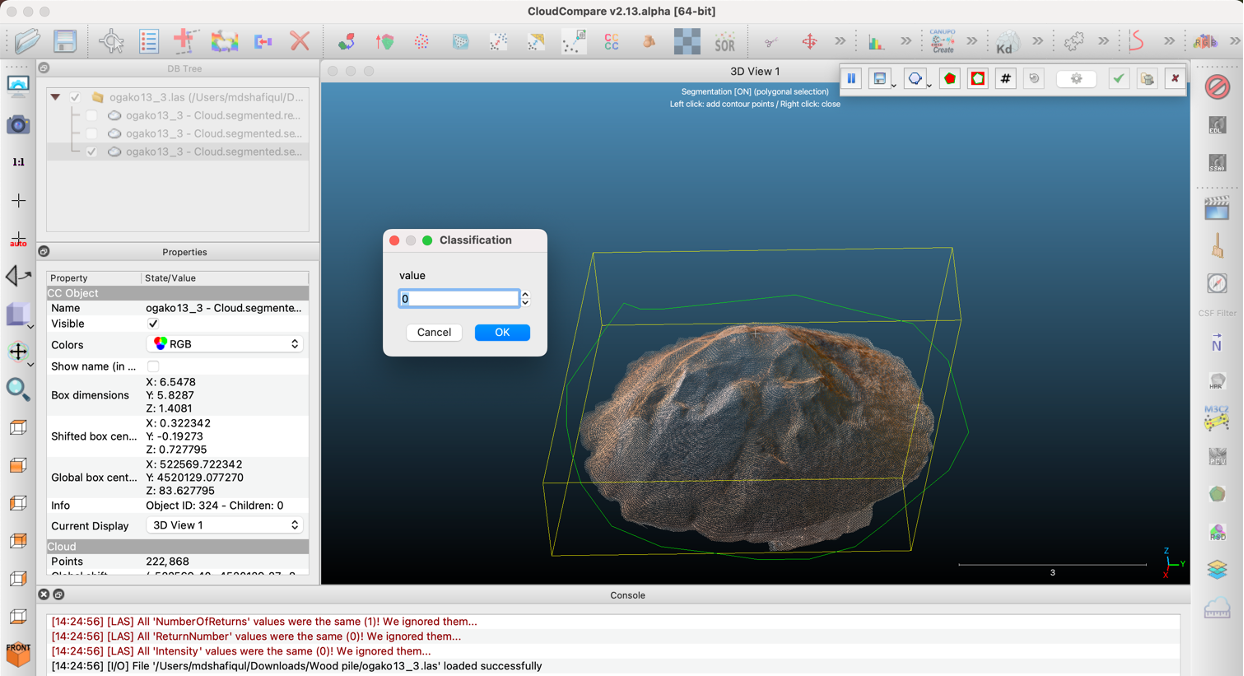

Annotating point cloud data with CloudCompare takes several steps. Here is the step-by-step procedure for annotating the wood powder data.

1. Import the point cloud data into CloudCompare

2. Draw a segmentation line around the target region

3. Assign a label to the target region (Fig 5)

Fig 5: labeling cropped region

4. Crop the segmented region and save it

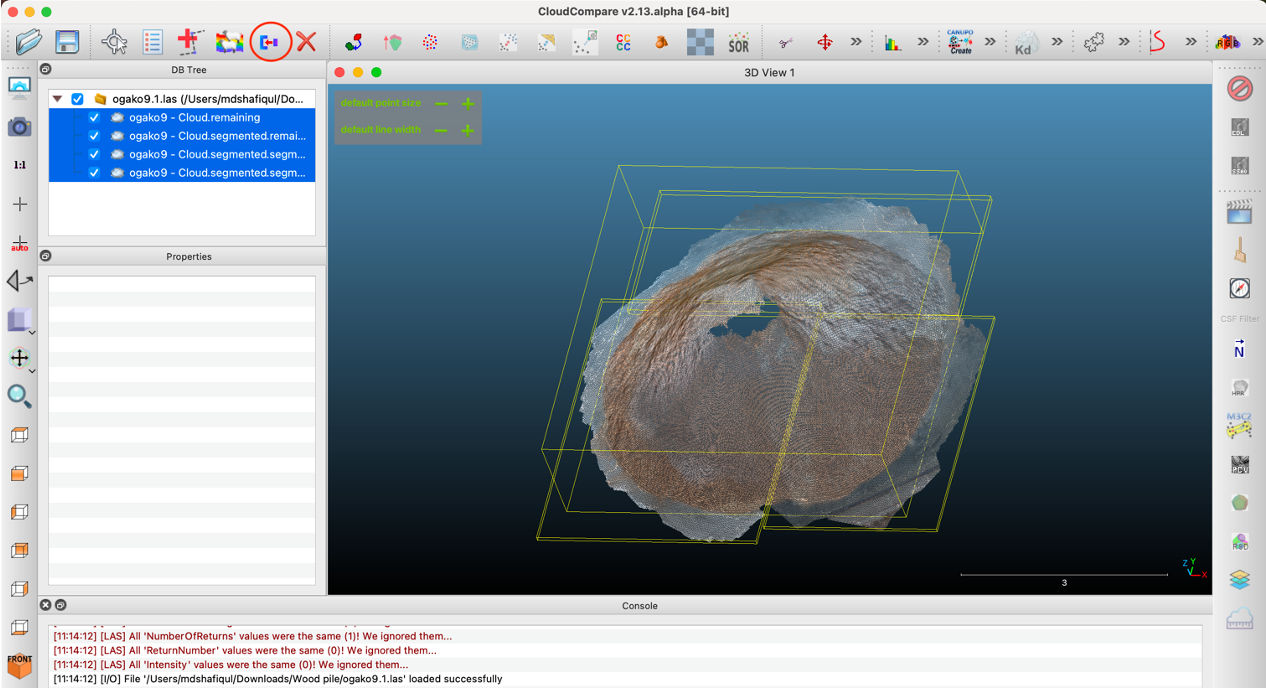

5. Select all the segmented regions (Fig 6)

6. Click the “merge multiple clouds” button (marked with a red circle). A single merged file is created.

Fig 6: merge segmented regions

7. After all segmented regions are merged, each segment is shown in a different color based on its “Classification” value (Fig 7, 8)

Fig 7: output of annotation

8. Save the annotated point cloud in any format (.las / .csv etc.)

PointNet Model

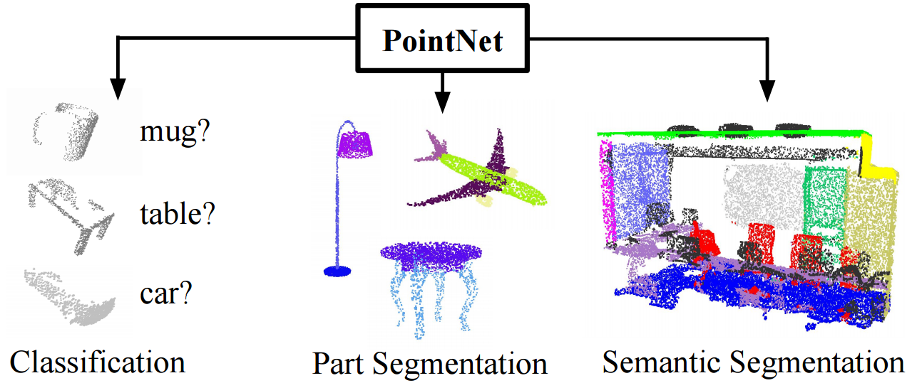

To calculate the volume of wood powder, we need 3D semantic segmentation, which means assigning a class label to every 3D point. Many models work on point cloud data, for example PointNet, RangeNet, and 2DPass. Some models require both point cloud data and an input image, whereas others work on point cloud data alone.

Fig 9: PointNet

PointNet is an architecture that works directly on point cloud data and does not need any external image of the input. Fig 9 shows the tasks PointNet can be applied to (e.g. classification and segmentation). This blog discusses an example of using the PointNet model for our wood powder volume measurement task.

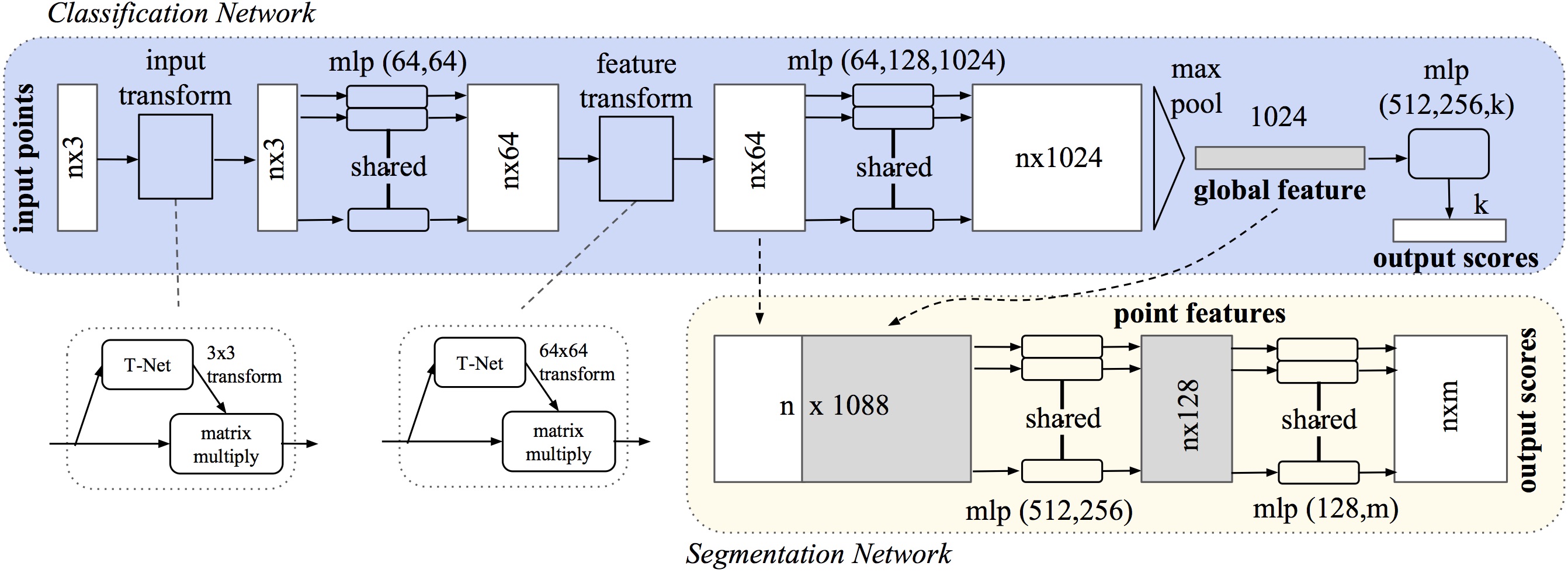

PointNet is a point-based architecture that addresses the challenges of representing and analyzing unstructured point cloud inputs. Here is a brief overview of the PointNet model architecture.

Fig 10: PointNet Architecture

Fig 10 shows the overall architecture of the PointNet model. The classification network processes a set of n points by applying input and feature transformations, then aggregates the point features with max pooling to generate classification scores for m classes. The segmentation network builds on this by merging global and local features to assign scores to individual points. The architecture uses multi-layer perceptrons (MLP), with layer sizes indicated in parentheses. All layers use batch normalization and ReLU activation functions. In the classification network, dropout layers are included in the final MLP to reduce overfitting.

PointNet is designed to be robust to changes in the orientation of the input point cloud, which reduces the need to train on many orientations. This capability comes from a submodule within PointNet known as T-Net, which predicts a transformation matrix that aligns the input points (and, later, the features), as illustrated in Fig 10.

The backbone of PointNet incorporates the T-Nets and forms the main part of the network, excluding the task-specific heads. It outputs global features for classification, or a combination of local and global features for segmentation. The global feature vector used in the paper has 1024 dimensions.

The segmentation head processes the concatenation of the learned local and global features, which gives a comprehensive representation of the input point cloud. The global features are replicated n times so that they can be concatenated with the local features of each of the n points. The head consists of a sequence of shared MLPs (multi-layer perceptrons) that preserve the number of points n. The final layer maps each point to one of m possible classes. Further details about the architecture can be found in the original PointNet paper.
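The data flow described above (shared per-point MLP, max pooling into a global feature, replication, and concatenation) can be illustrated with a small NumPy sketch. It is only a toy under stated assumptions: the weights are random and untrained, T-Net and batch normalization are left out, and the 64-dimensional local feature size and the function names are illustrative choices. Only the 1024-dimensional global feature is taken from the text.

```python
import numpy as np

rng = np.random.default_rng(0)


def shared_mlp(features, weight, bias):
    """Apply the same linear layer + ReLU to every point independently."""
    return np.maximum(features @ weight + bias, 0.0)


n_points, n_classes = 128, 2  # two classes: wood powder vs. everything else
w_local, b_local = rng.normal(size=(3, 64)), np.zeros(64)
w_global, b_global = rng.normal(size=(64, 1024)), np.zeros(1024)
w_head, b_head = rng.normal(size=(64 + 1024, n_classes)), np.zeros(n_classes)


def segment(points):
    """Return the global feature and per-point class scores for an (n, 3) array."""
    local = shared_mlp(points, w_local, b_local)          # (n, 64) local features
    per_point = shared_mlp(local, w_global, b_global)     # (n, 1024)
    global_feat = per_point.max(axis=0)                   # (1024,) max pooling over points
    tiled = np.tile(global_feat, (points.shape[0], 1))    # replicate n times
    combined = np.concatenate([local, tiled], axis=1)     # (n, 1088) local + global
    scores = combined @ w_head + b_head                   # (n, m) per-point class scores
    return global_feat, scores


# Shuffling the input points should not change the global feature,
# because max pooling ignores the order of the points.
cloud = rng.normal(size=(n_points, 3))
order = rng.permutation(n_points)
feat_a, scores_a = segment(cloud)
feat_b, scores_b = segment(cloud[order])
same_global_feature = np.allclose(feat_a, feat_b)
```

The per-point scores simply follow the points when they are reordered, which is what makes the design suitable for unordered point sets.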

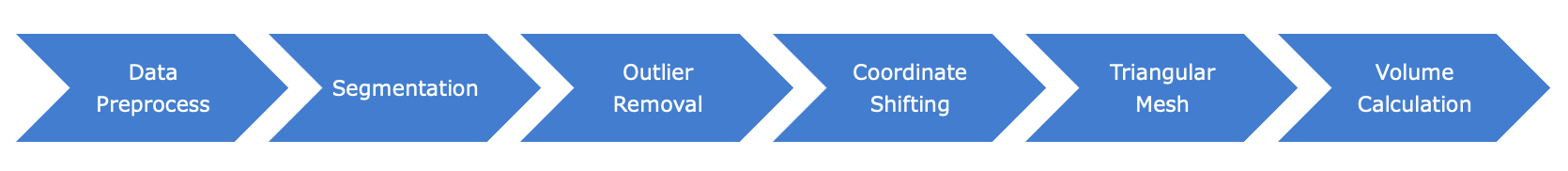

Volume Measurement Pipeline

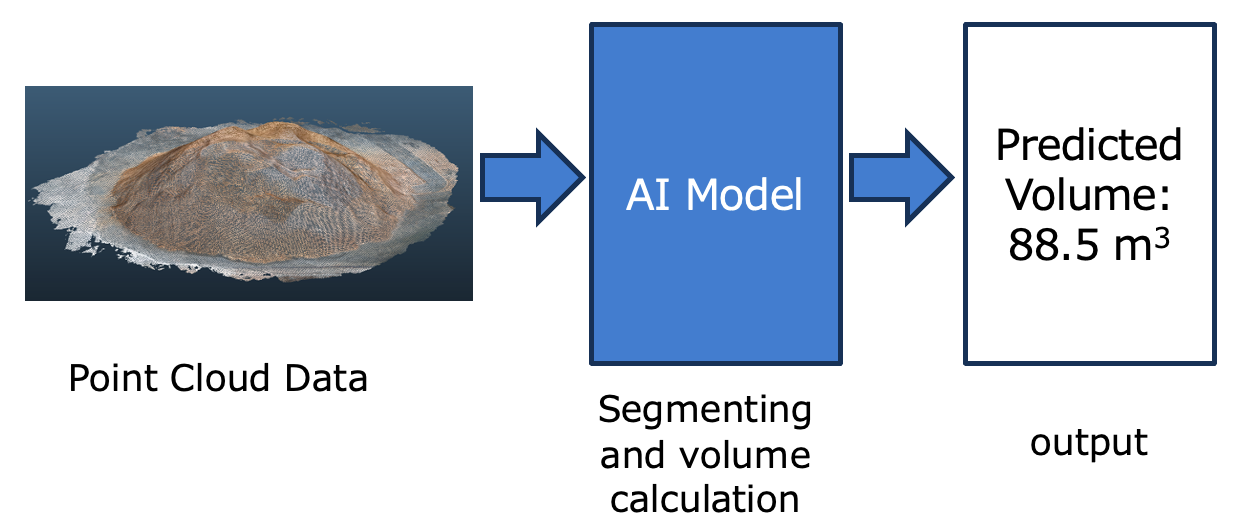

To measure volume from point cloud data we follow several steps: data preprocessing, segmentation, outlier removal, coordinate shifting, triangular mesh generation, and volume calculation from the mesh.

Fig 11: pipeline

Fig 11 shows all the steps in the volume estimation pipeline. In the data preprocessing step we create multiple samples from each point cloud file using a non-overlapping sliding window. We then train the AI model to segment the point cloud into two parts: target (wood powder points) and non-target (floor and wall points). After segmentation, post-processing removes outliers. The X and Y coordinates of the point cloud are then shifted so that the center of the whole point cloud lies at (0, 0). After coordinate shifting, a triangular mesh is generated from the segmented point cloud. Finally, we calculate the volume under each triangle of the mesh and sum these volumes to get the volume of the whole wood pile. Some of the steps in the pipeline are briefly described below.
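Two of these steps, sampling and coordinate shifting, are simple enough to sketch in a few lines. The sketch below is one possible reading of the text, not the project's actual code: it assumes that the non-overlapping window runs over consecutive points in file order, that leftover points are dropped, and that "center" means the centroid of the cloud. The function names are hypothetical.

```python
import numpy as np


def split_into_windows(points, window_size):
    """Cut an (N, D) array into consecutive, non-overlapping chunks of points.

    Points that do not fill a whole window at the end are dropped
    (an assumption; padding or resampling would be other options).
    """
    n_windows = len(points) // window_size
    trimmed = points[: n_windows * window_size]
    return trimmed.reshape(n_windows, window_size, points.shape[1])


def center_xy(points):
    """Shift X and Y so the centroid of the cloud lies at (0, 0); Z is unchanged."""
    shifted = points.astype(np.float64, copy=True)
    shifted[:, :2] -= shifted[:, :2].mean(axis=0)
    return shifted


# Tiny made-up cloud: 10 points with x, y, z columns.
cloud = np.arange(30, dtype=np.float64).reshape(10, 3)
windows = split_into_windows(cloud, window_size=4)   # 2 windows of 4 points each
centered = center_xy(cloud)
```

Each window can then be fed to the segmentation model as one sample, while the centering is applied to the full cloud before meshing.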

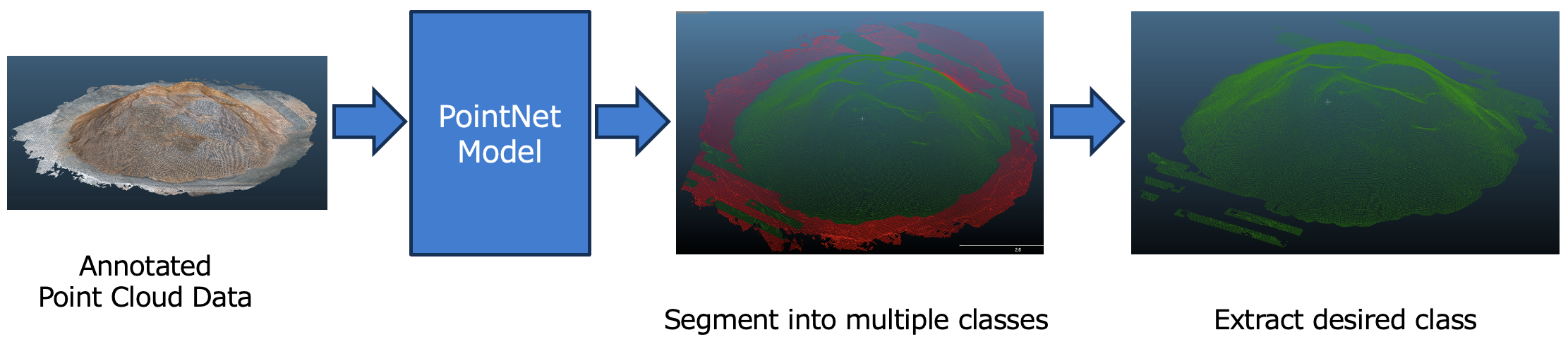

Segmentation

To measure the volume of wood powder we need to separate the target wood powder points from the non-target points (e.g. floor, wall). This separation is done with semantic segmentation, which assigns a class label to each point. In this step, we train the PointNet model on our data: the model takes sampled point cloud data as input and segments the points into the target classes.

Fig 12: Segmentation

Fig 12 shows the segmentation into target and non-target data. The two colors in the figure represent the two segments. Red marks the non-target portion, which contains points of the floor, wall, etc. Green marks the target segment, which contains the wood powder points. We used mIoU (mean Intersection over Union) as the metric for the segmentation performance of the AI model. The average mIoU on the test data is 86.95%.
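For reference, mIoU for per-point labels can be computed as in the following sketch. It uses the usual definition (per-class intersection over union, averaged over classes); the function name and the tiny label arrays are made up for illustration and are not the project's evaluation code or data.

```python
import numpy as np


def mean_iou(y_true, y_pred, num_classes=2):
    """Average the per-class IoU over all classes that occur in either array."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    ious = []
    for cls in range(num_classes):
        intersection = np.sum((y_true == cls) & (y_pred == cls))
        union = np.sum((y_true == cls) | (y_pred == cls))
        if union > 0:  # skip classes that appear in neither array
            ious.append(intersection / union)
    return float(np.mean(ious))


# Made-up labels: 0 = wood powder (target), 1 = non-target.
truth = [0, 0, 1, 1]
guess = [0, 1, 1, 1]
score = mean_iou(truth, guess)  # IoU is 1/2 for class 0 and 2/3 for class 1
```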

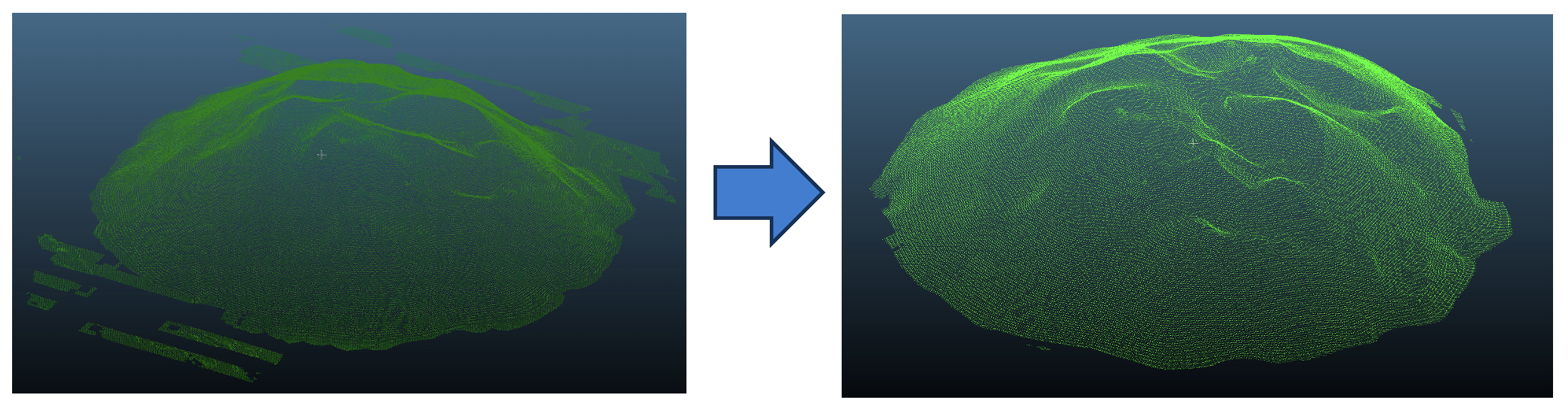

Outlier Removal

After semantic segmentation and extraction of the target class points, some post-processing is required: the outliers need to be removed.

Fig 13: outlier removal

Fig 13 shows outlier removal on the segmented target data. Removing outliers makes the volume calculation more precise.
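The text does not say which outlier filter was used. A common choice is statistical outlier removal, sketched below as an assumption: a point is dropped when its mean distance to its k nearest neighbors is far above the average for the cloud. The function name and parameter defaults are illustrative. The brute-force distance matrix is only practical for small clouds; for a scan of about 300,000 points a KD-tree or Open3D's `remove_statistical_outlier` would be the realistic option.

```python
import numpy as np


def remove_statistical_outliers(points, k=8, std_ratio=2.0):
    """Drop points whose mean k-nearest-neighbor distance is unusually large.

    Returns the kept points and the boolean mask that selects them.
    """
    diffs = points[:, None, :] - points[None, :, :]
    dists = np.linalg.norm(diffs, axis=2)            # (N, N) pairwise distances
    # After sorting, column 0 is each point's distance to itself (zero), so skip it.
    knn = np.sort(dists, axis=1)[:, 1 : k + 1]
    mean_knn = knn.mean(axis=1)
    threshold = mean_knn.mean() + std_ratio * mean_knn.std()
    keep = mean_knn <= threshold
    return points[keep], keep


# Made-up data: a compact cluster plus one stray point far away.
rng = np.random.default_rng(0)
cluster = rng.random((50, 3))
noisy_cloud = np.vstack([cluster, [[100.0, 100.0, 100.0]]])
clean_cloud, keep_mask = remove_statistical_outliers(noisy_cloud)
```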

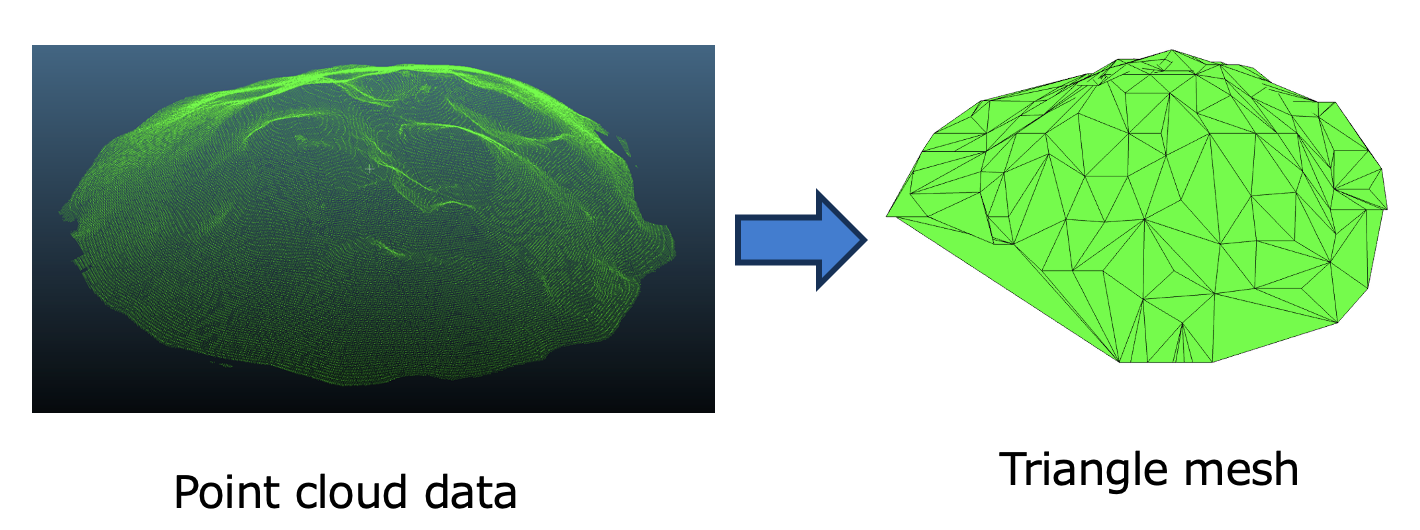

Triangular Mesh

A volume cannot be computed directly from a point cloud, because a point cloud is only a set of discrete points without a surface. A mesh is therefore required. To create a mesh from point cloud data we can use surface reconstruction algorithms. Surface reconstruction is an ill-posed problem: many different surfaces can fit the same points, so there is no perfect solution and the algorithms rely on heuristics.

Fig 14: triangular mesh from point cloud

Fig 14 shows the construction of a mesh from point cloud data. A mesh is a set of interconnected triangles or polygons that describe the shape of an object. We used the Open3D library to convert point clouds to triangular meshes.
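The exact reconstruction method is not stated here. For a pile resting on a floor, one simple option is a 2.5D approach: triangulate the points in the XY plane and keep Z as the height of each vertex. The sketch below uses `scipy.spatial.Delaunay` for this, which is a swapped-in technique for illustration rather than the confirmed method; `triangulate_xy` and `to_open3d_mesh` are hypothetical helper names, and the second one is optional and needs Open3D installed.

```python
import numpy as np
from scipy.spatial import Delaunay


def triangulate_xy(points):
    """Return (M, 3) vertex indices from a Delaunay triangulation of the XY projection."""
    return Delaunay(points[:, :2]).simplices


def to_open3d_mesh(points, triangles):
    """Wrap vertices and triangles in an Open3D TriangleMesh (optional helper)."""
    import open3d as o3d  # imported lazily; only needed for visualization or export

    mesh = o3d.geometry.TriangleMesh()
    mesh.vertices = o3d.utility.Vector3dVector(points)
    mesh.triangles = o3d.utility.Vector3iVector(triangles)
    return mesh


# Made-up points: four corners of a unit square and one raised interior point.
surface_points = np.array([
    [0.0, 0.0, 1.0],
    [1.0, 0.0, 1.0],
    [1.0, 1.0, 1.0],
    [0.0, 1.0, 1.0],
    [0.4, 0.6, 2.0],
])
surface_triangles = triangulate_xy(surface_points)
```

A 2.5D triangulation assumes the surface has a single height for every (x, y) position, which is reasonable for a pile but not for overhanging shapes.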

Volume Calculation

The triangular mesh built with Open3D gives us a surface. There are several ways to calculate the volume from this surface. One approach is to compute, for each triangle of the surface, the volume between the triangle and the XY plane, and then add the volumes of all the triangles to get the total volume.

Fig 15: volume calculation

Fig 15 shows the flow of volume calculation from the triangular mesh. We followed the approach above and computed the volume from the triangular mesh.
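The per-triangle volume has a simple closed form: the solid between a triangle and the XY plane is a prism with a slanted top, and its volume equals the area of the triangle's XY projection multiplied by the mean height of its three vertices. The sketch below applies this formula, assuming the floor lies on the plane z = 0 and all heights are non-negative. The function name and the test mesh are made up for illustration.

```python
import numpy as np


def volume_under_mesh(vertices, triangles):
    """Sum the volumes between each triangle and the XY plane (z = 0).

    vertices: (N, 3) float array; triangles: (M, 3) integer vertex indices.
    """
    p0, p1, p2 = (vertices[triangles[:, i]] for i in range(3))
    # Area of the triangle projected onto the XY plane (half the 2D cross product).
    cross_z = (p1[:, 0] - p0[:, 0]) * (p2[:, 1] - p0[:, 1]) \
        - (p2[:, 0] - p0[:, 0]) * (p1[:, 1] - p0[:, 1])
    area_xy = 0.5 * np.abs(cross_z)
    mean_height = (p0[:, 2] + p1[:, 2] + p2[:, 2]) / 3.0
    return float(np.sum(area_xy * mean_height))


# Made-up check: a flat 1 x 1 square at height 2, split into two triangles.
flat_vertices = np.array([
    [0.0, 0.0, 2.0],
    [1.0, 0.0, 2.0],
    [1.0, 1.0, 2.0],
    [0.0, 1.0, 2.0],
])
flat_triangles = np.array([[0, 1, 2], [0, 2, 3]])
flat_volume = volume_under_mesh(flat_vertices, flat_triangles)  # a 1 x 1 x 2 box
```

The result is in the cube of whatever length unit the scan uses, so a scan in meters yields cubic meters.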

Result

In our experiment there were 11 wood powder point cloud files in total. We used 6 files for training, 1 file for validation, and 4 files for testing. Each file contains about 300,000 points. We used a GCP server to train and test the model.

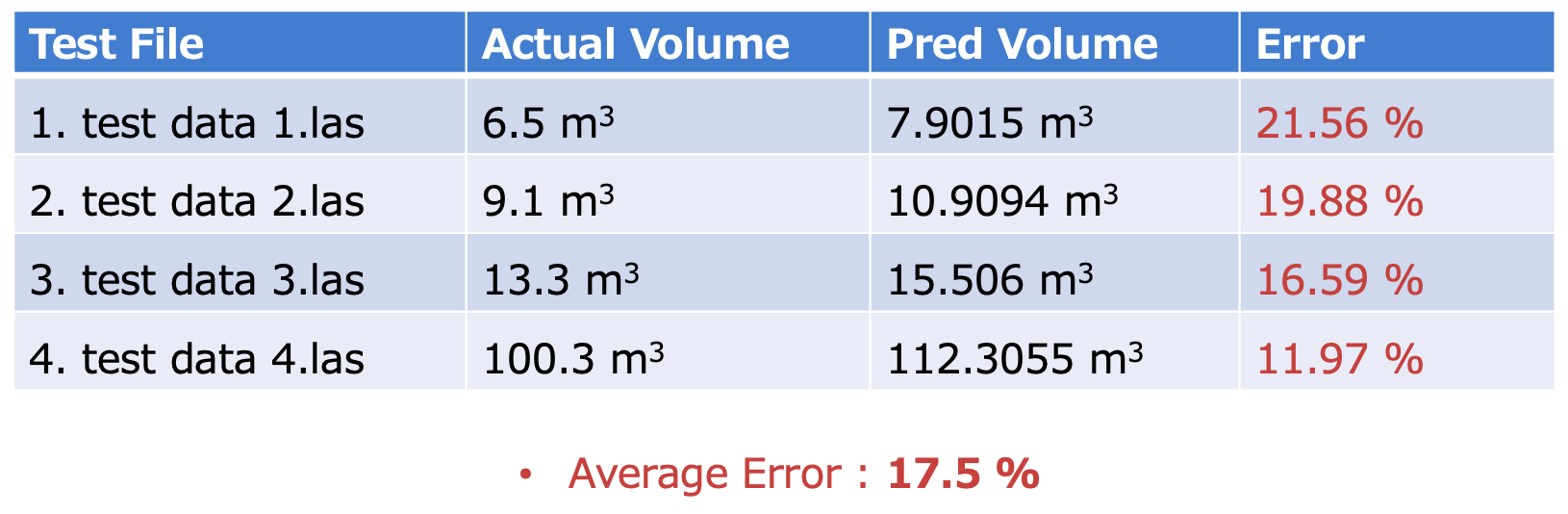

Table 1: test result

Table 1 shows the results of our experiments: the actual and predicted volume for each test file, together with the error. The average error is 17.5%. The table shows that the predicted volume is larger than the actual volume. We attribute this to the small amount of training data: the segmentation mIoU did not exceed 90%, so many outliers or false positive points remain. These points are carried into the triangular mesh, where they add extra volume, so the summed volume of the wood pile is overestimated. With more training data, we expect the predicted volume to be much closer to the actual volume.
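The exact error formula is not given in the text. A natural reading, assumed here, is the absolute percentage error per file, averaged over the test files. The numbers in the sketch are invented for illustration and are not the values from Table 1.

```python
def mean_percentage_error(actual, predicted):
    """Average of |predicted - actual| / actual over all files, in percent."""
    errors = [abs(p - a) / a * 100.0 for a, p in zip(actual, predicted)]
    return sum(errors) / len(errors)


# Hypothetical volumes, not taken from the experiment.
example_actual = [1.0, 2.0]
example_predicted = [1.1, 2.4]
example_error = mean_percentage_error(example_actual, example_predicted)
```

Because the absolute value discards the sign, a separate check of whether the predicted volume is larger than the actual one is needed to detect systematic overestimation.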

Web App

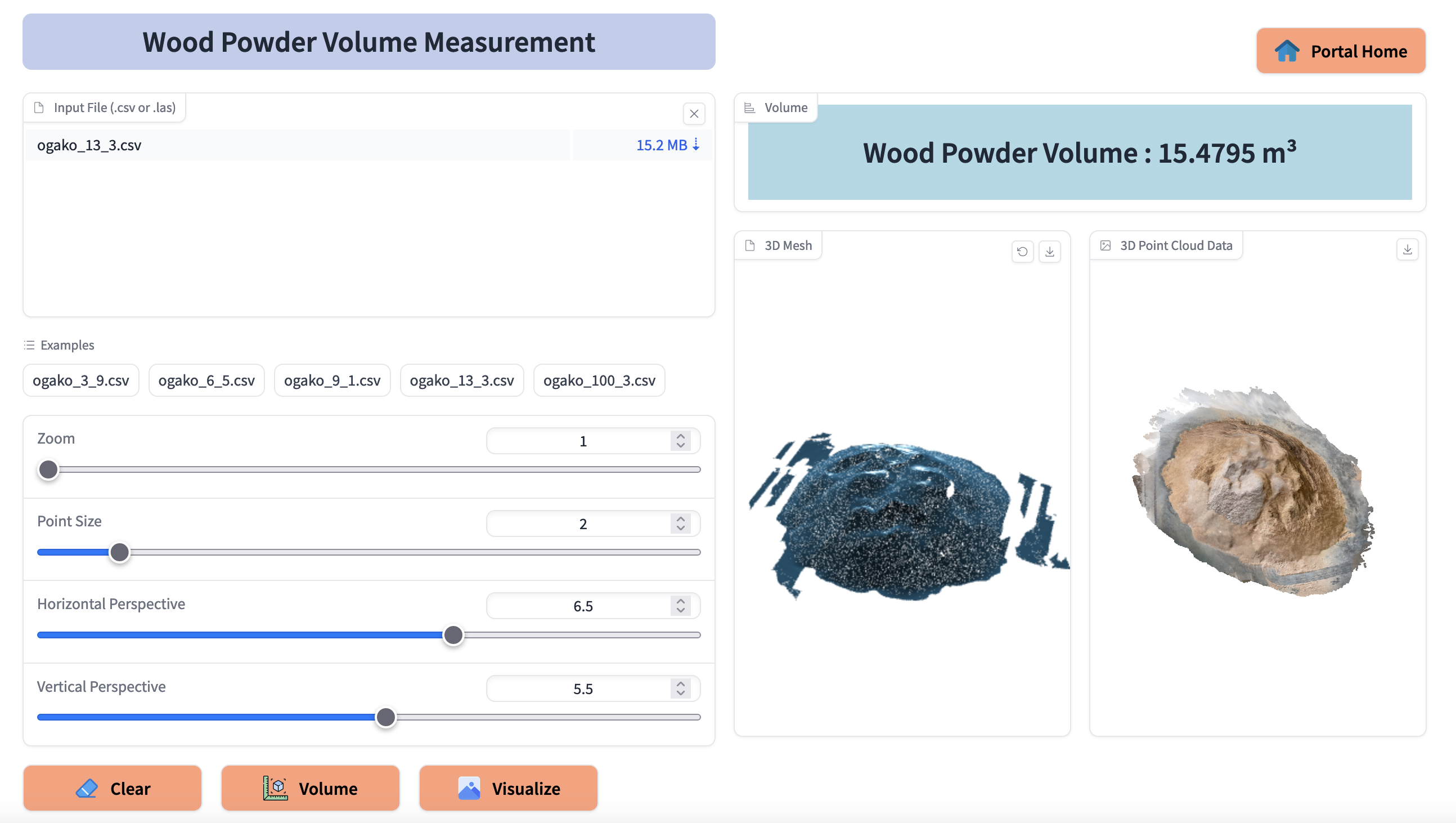

We developed a web app for wood powder volume measurement using the Gradio framework. The web interface is split into two parts: the input panel on the left and the output panel on the right.

Fig 16: Web app

Fig 16 shows the UI of the web app. In the input panel we can upload a ‘las’ or ‘csv’ file to calculate its volume. The input panel also has sliders that change how the input data is visualized. The output panel shows the calculated volume for the input data, along with visualizations of the input data as a 2D image and as a 3D mesh.

Summary

The main goal was to measure the volume of wood powder from point cloud data. We used the PointNet model for point cloud segmentation. After segmenting the target data, we created a triangular mesh, measured the volume under each triangle, and summed these volumes to get the overall volume of the wood powder. The segmentation and volume estimation results were reasonably good: the segmentation mIoU is 86.95%, and the average error between actual and predicted volume is 17.5%. Only a small amount of data was available for training and testing. More data should improve both the model performance and the accuracy of the volume calculation.

In this way, Chowagiken continues to conduct research and development on a daily basis with the aim of implementing AI in society. If you have any problems, please feel free to contact us.

[References]

- Point Cloud : https://www.dronegenuity.com/point-clouds/

- Triangle volume : https://www.mathpages.com/home/kmath393/kmath393.htm

- Mesh Volume : https://jose-llorens-ripolles.medium.com/stockpile-volume-with-open3d-fa9d32099b6f

- Open3D : https://betterprogramming.pub/introduction-to-point-cloud-processing-dbda9b167534

- PointNet : https://towardsdatascience.com/point-net-for-semantic-segmentation-3eea48715a62

- CloudCompare : https://www.danielgm.net/cc/

- Laspy : https://laspy.readthedocs.io/en/latest/intro.html

- Gradio : https://www.gradio.app/

- Docker : https://www.docker.com/get-started/
